Dexter Ong

Projects

Some of the fun and exciting projects that I've worked on.

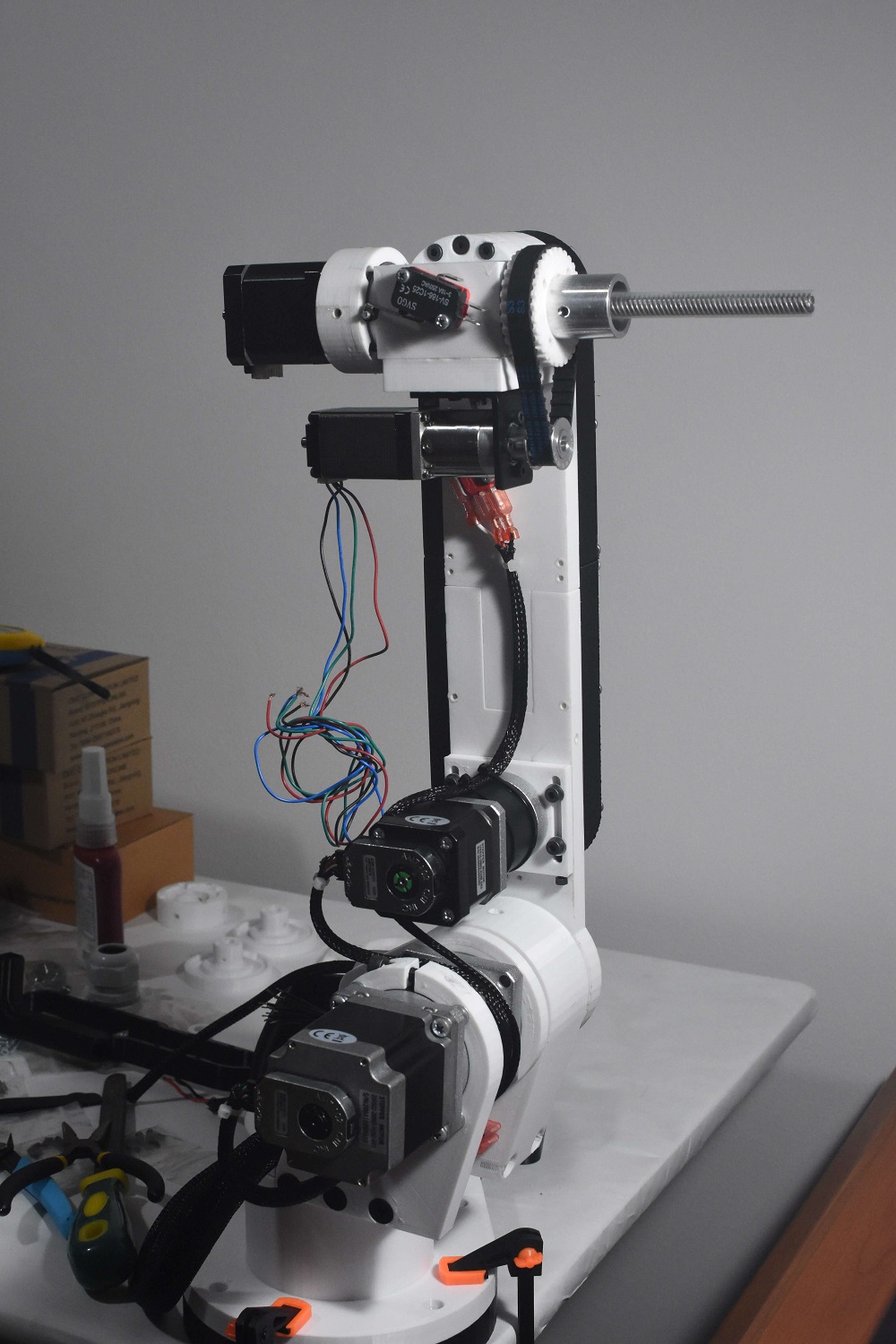

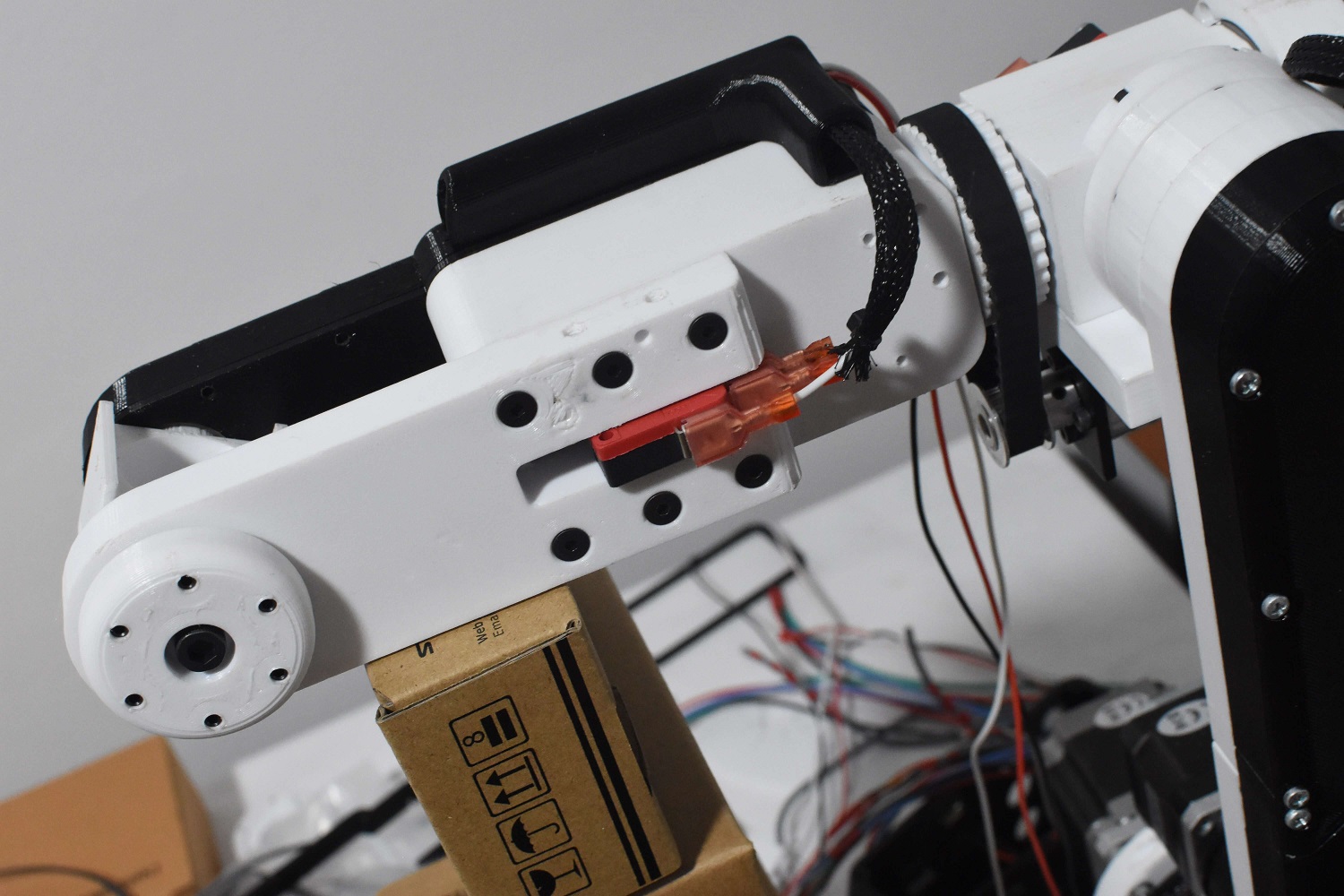

3D Printed 6-DoF Robotic Arm

I have always found robotic arms and biomimetic robots fascinating because of how they are inspired by biological designs from nature. While most robotic manipulators aren't necessarily modelled to replicate the human arm, they have the potential to perform everyday tasks and work alongside us as collaborative robots. With this arm I plan to explore some interesting concepts that I have in mind for on-arm vision, grippers and human-robot interaction. I actively share my work in an effort to contribute to the maker community.

Proximity Sensor

Capacitive proximity sensors provide a simple and low-cost solution for detecting a nearby hand.

Compared to torque-based approaches that require contact, a proximity sensor enables more complex interactions with the user for collaborative work.

For instance, when the user needs to work close to the arm, the speed of the arm can be reduced accordingly.

A safety stop is only triggered when there is direct contact.

This was achieved with a DIY capacitive plate (copper tape and aluminium foil) in the shell of the arm.
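The speed adjustment described above can be sketched roughly as follows. This is a minimal illustration and not the code running on the arm: the function name `speed_command`, the threshold values and the assumption that the raw capacitive reading grows as a hand approaches are all hypothetical.

```python
def speed_command(reading, near=200.0, contact=800.0, min_scale=0.2):
    """Return (speed_scale, safety_stop) for one capacitive reading.

    Assumption: the reading increases as a hand approaches the plate.
    `near`, `contact` and `min_scale` are made-up tuning values.
    """
    if reading >= contact:
        return 0.0, True    # direct contact: trigger the safety stop
    if reading <= near:
        return 1.0, False   # nothing nearby: run at full speed
    # Hand is nearby: interpolate linearly from full speed down to min_scale.
    fraction = (reading - near) / (contact - near)
    return 1.0 - fraction * (1.0 - min_scale), False
```

A reading halfway between the two thresholds would slow the arm to roughly 60% of full speed with these example values, while the stop flag stays off until the contact threshold is reached.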

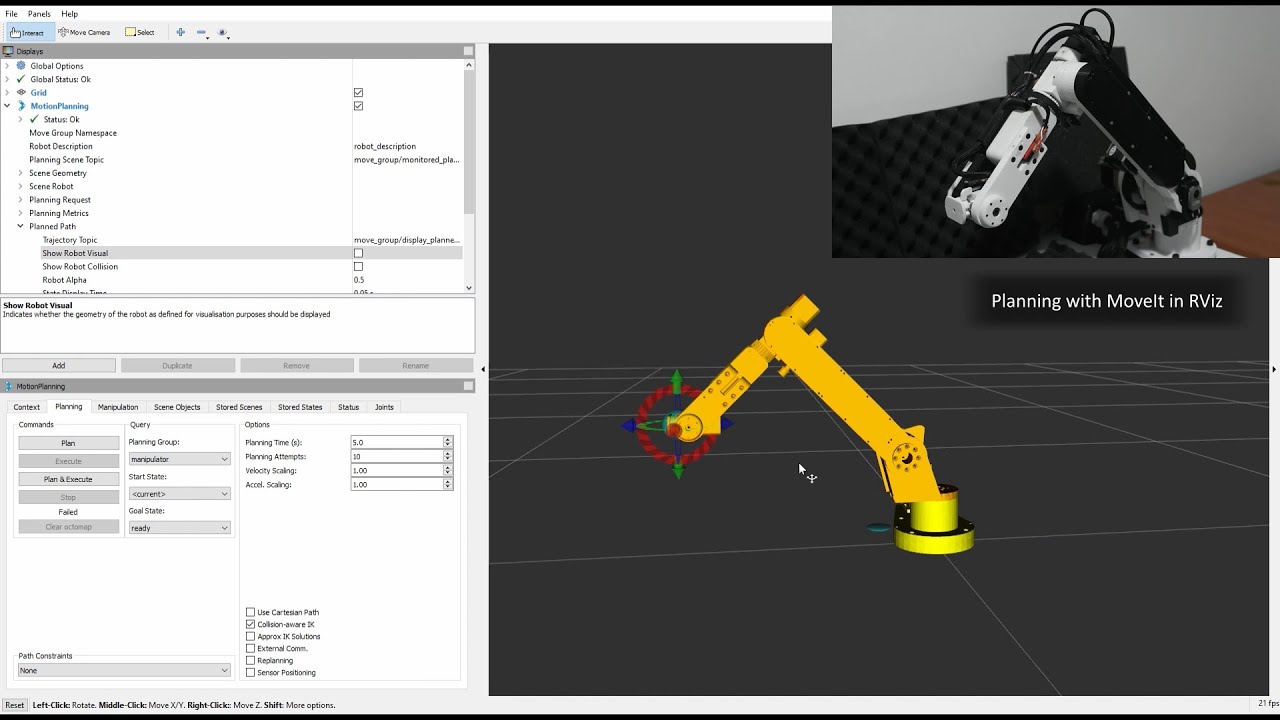

ROS and MoveIt

I managed to get the arm set up with ROS and MoveIt. Code is available here.

I also designed some adapters to mount the encoders directly on some of the joints.

I was struggling with significant play and backlash in the joints, which was to be expected with 3D printed parts.

Using joint encoders was the best way to get accurate measurements of the joint positions for motion planning.

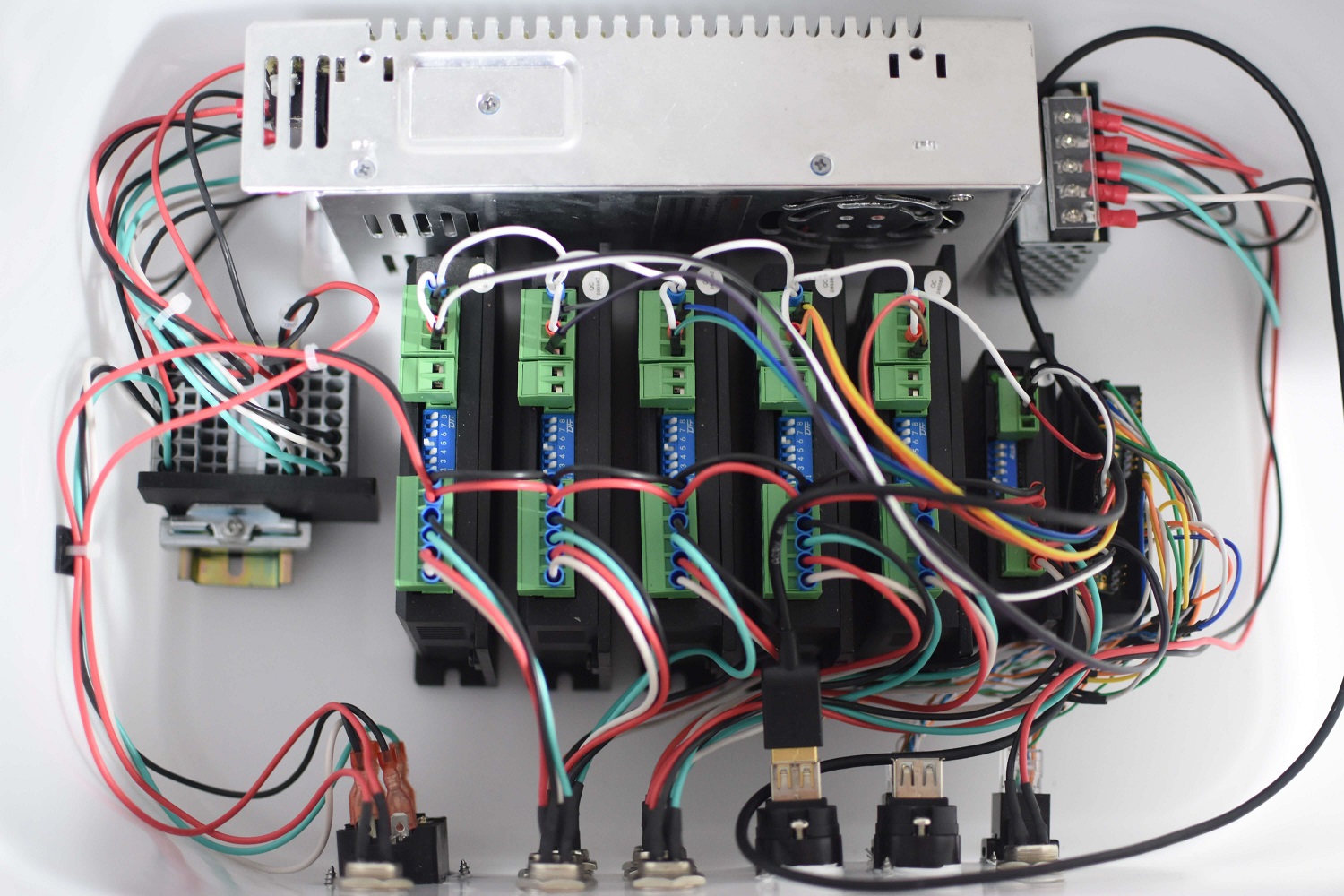

Build Log

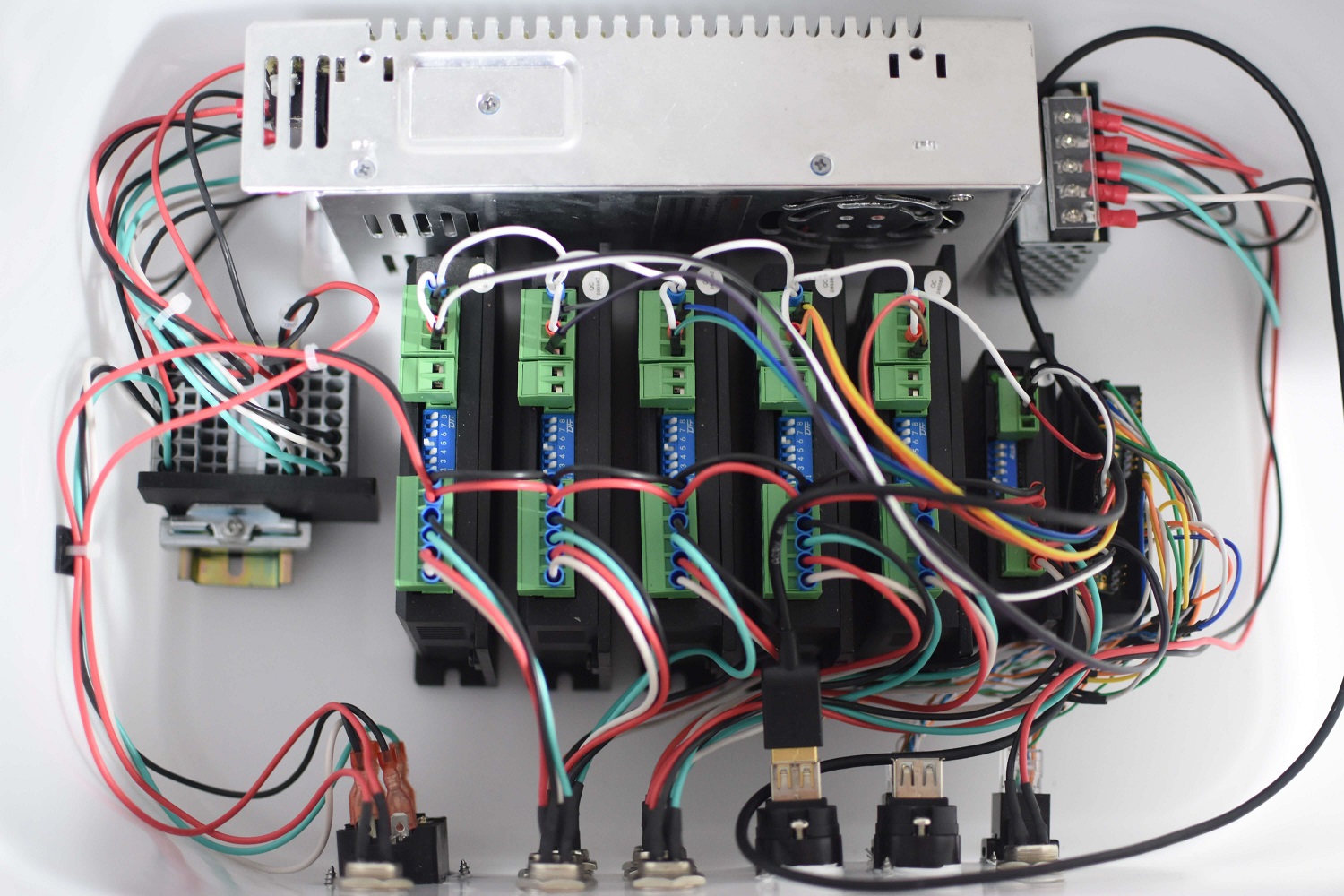

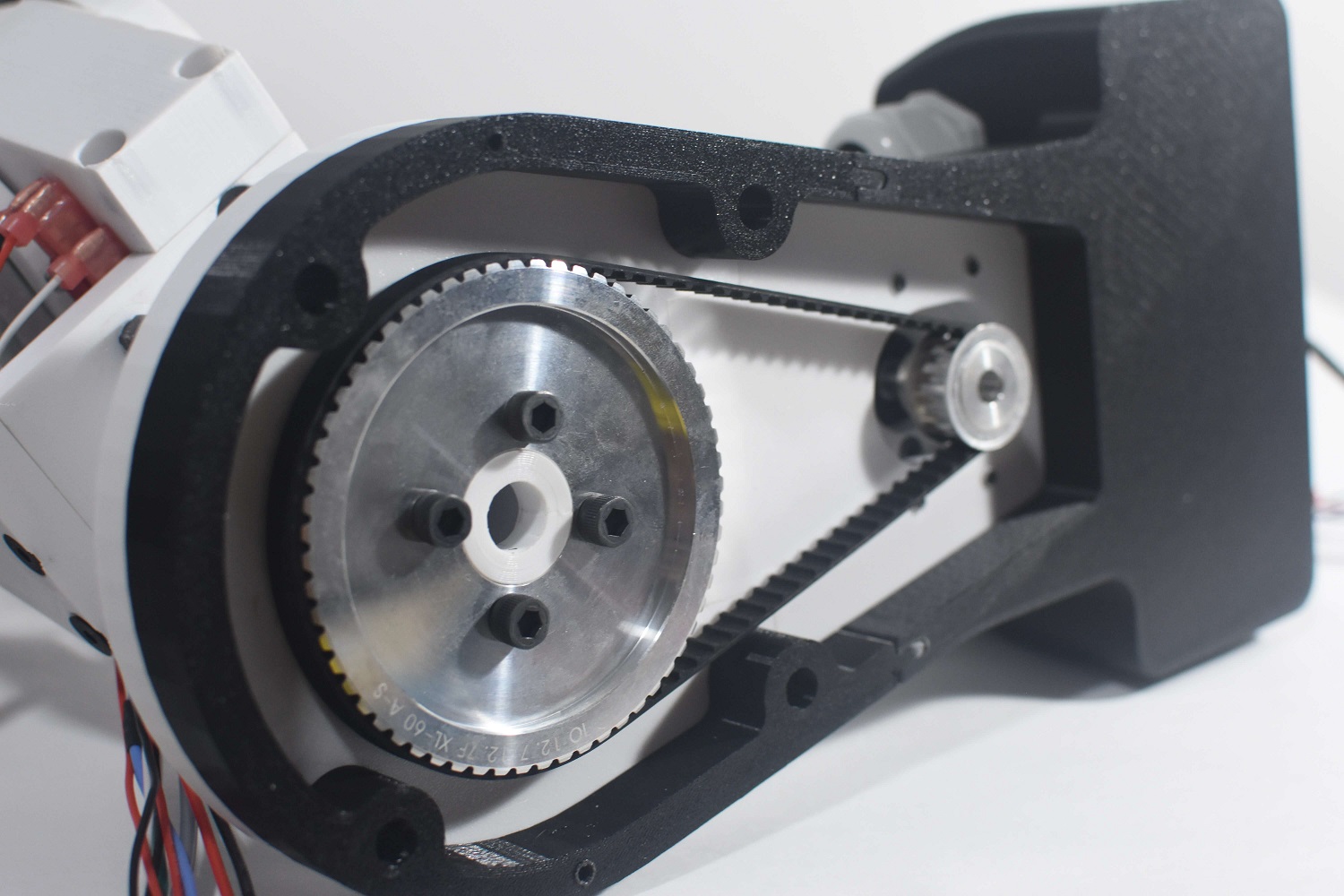

Aside from some components like belts, sprockets and bearings, most of the arm is 3D printed. It made for an interesting 3D printing project in which I had to modify parts and tolerances and explore different materials. Because the parts are 3D printed, there is a fair amount of flexing and slip in the joints in addition to the backlash from the gearboxes. This was to be expected, and I chose to go ahead because I also hope to investigate vision-based methods to account for these inaccuracies and to explore making low-cost robotic arms more viable and accessible. Along the way, I have also made hardware modifications to work around this, such as adding joint position encoders and adding and repositioning inductive limit switches.

The AR3 is an open-source design from Annin Robotics, and I share my work with that community to contribute to this great project.

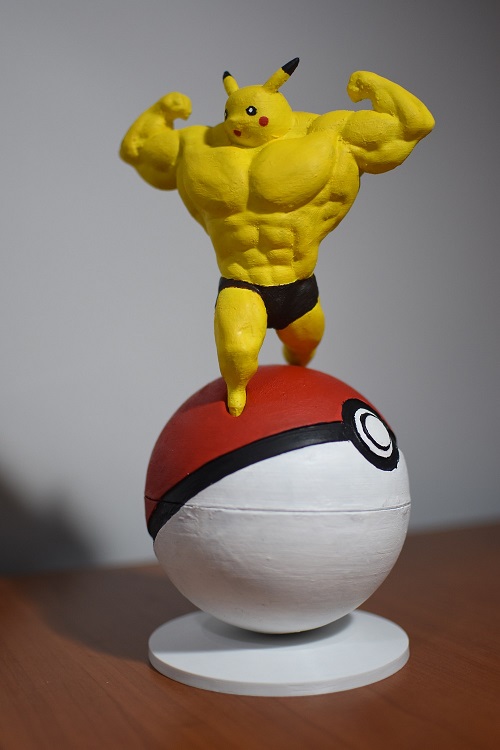

Wobbling Ultra Swole Pikachu

The most unnecessary but also the most necessary thing I've ever made.

Dynamic Object Tracking and Classification Using a Multi-Camera System

B.Eng. Dissertation. This paper presents an approach to class-agnostic detection and tracking of objects using scene flow with fisheye cameras. The object detection model is fine-tuned on a local dataset. Depth values are obtained using plane-sweeping stereo matching. Points from scene flow are clustered to initialise and track targets across frames. Class results from 2D object detection are used to assign labels to existing tracks. Since points across the entire scene flow are considered to initialise tracks without detection priors, unknown object types can be detected and tracked as well.
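As a rough illustration of how scene flow points might be grouped into track candidates without any detection prior, the sketch below keeps only points whose flow magnitude exceeds a threshold and groups them by simple Euclidean region growing. The dissertation does not state which clustering method it uses, so this technique is a stand-in, and the function name, `min_flow` and `radius` are hypothetical.

```python
import math
from collections import deque


def cluster_moving_points(points, flows, min_flow=0.05, radius=0.5):
    """Group moving 3D points into clusters of point indices.

    points: list of (x, y, z) positions.
    flows:  list of (fx, fy, fz) scene flow vectors, one per point.
    Assumption: a point counts as moving if its flow magnitude exceeds min_flow.
    """
    moving = [i for i, f in enumerate(flows) if math.hypot(*f) > min_flow]
    unvisited = set(moving)
    clusters = []
    for seed in moving:
        if seed not in unvisited:
            continue  # already absorbed into an earlier cluster
        unvisited.remove(seed)
        queue = deque([seed])
        cluster = []
        while queue:
            i = queue.popleft()
            cluster.append(i)
            # Grow the cluster with every unvisited point within `radius`.
            near = [j for j in unvisited
                    if math.dist(points[i], points[j]) <= radius]
            for j in near:
                unvisited.remove(j)
                queue.append(j)
        clusters.append(sorted(cluster))
    return clusters
```

Each returned cluster could then seed a new track or be matched to an existing one, with class labels from the 2D detector attached afterwards. The pairwise distance search is quadratic in the number of moving points, so a real pipeline would likely use a spatial index.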

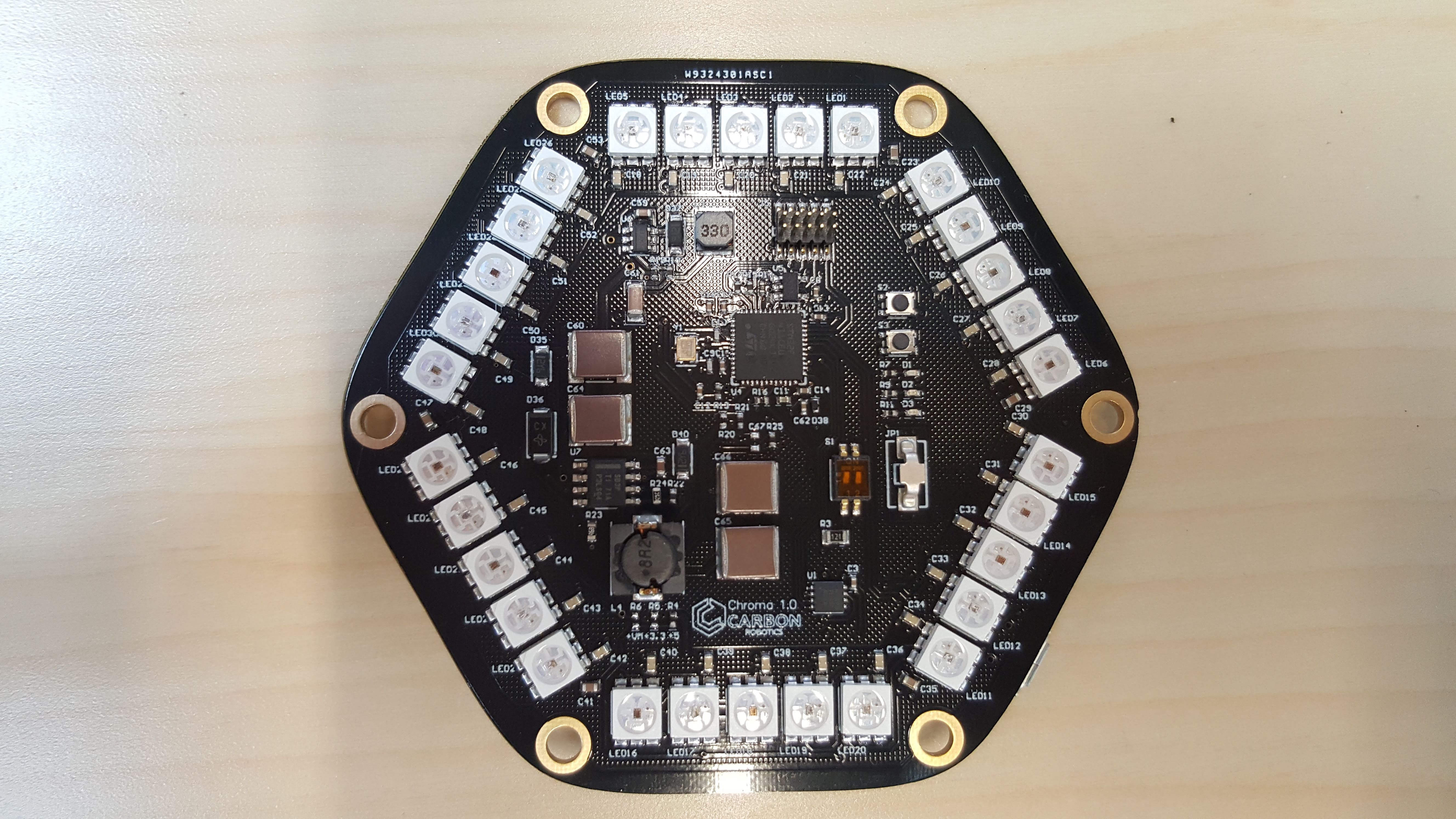

Robotic Arm Elbow LED

Implemented the elbow LED for the arm as one of my projects during my internship with Carbon Robotics. Designed and integrated the PCB and wrote the firmware for the STM32F4 MCU to allow interfacing with the arm motor controllers for programmable behaviours.

LiDAR Range Image-based Segmentation

Using range images from LiDAR data for segmentation of point clouds. Bounding boxes are drawn around segmented objects.
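The first step of such a pipeline, turning a point cloud into a range image, can be sketched with a spherical projection. This covers the projection only and not the segmentation itself, and the image size and vertical field of view below are assumed values for a hypothetical 16-beam sensor rather than the setup used in this project.

```python
import math


def to_range_image(points, rows=16, cols=360, fov_up_deg=15.0, fov_down_deg=-15.0):
    """Project (x, y, z) points into a rows x cols range image.

    Each pixel stores the range of the closest point that falls into it;
    0.0 marks an empty pixel. Sensor parameters are assumptions.
    """
    image = [[0.0] * cols for _ in range(rows)]
    fov_up = math.radians(fov_up_deg)
    fov = fov_up - math.radians(fov_down_deg)
    for x, y, z in points:
        r = math.sqrt(x * x + y * y + z * z)
        if r == 0.0:
            continue  # skip points at the sensor origin
        yaw = math.atan2(y, x)    # horizontal angle
        pitch = math.asin(z / r)  # vertical angle
        col = int(0.5 * (1.0 - yaw / math.pi) * cols) % cols
        row = math.floor((fov_up - pitch) / fov * rows)
        if 0 <= row < rows and (image[row][col] == 0.0 or r < image[row][col]):
            image[row][col] = r
    return image
```

Once the cloud is in this form, neighbouring pixels can be compared by range to decide whether they belong to the same object, which is much cheaper than a nearest-neighbour search in 3D.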

Smart Window System

Proof of concept of a smart window system. Built with minimal off-the-shelf components, the system runs off a Raspberry Pi and various sensor circuits, and includes cloud and mobile app integration. The window is driven by a BLDC motor. Self-calibration of the window position using an optical tachometer is shown in the video.